The most important frontier in AI isn’t bigger models. It’s what they actually do in the wild.

illuminating the runtime.

securing intelligent systems.

Inference-time auditing that turns every AI decision into signed, replayable evidence

mission

Make AI decisions as accountable as human ones.

Today’s AI is optimized for capability, not consequences. We’re changing that by treating every decision as something that must be seen, evaluated, and proven.

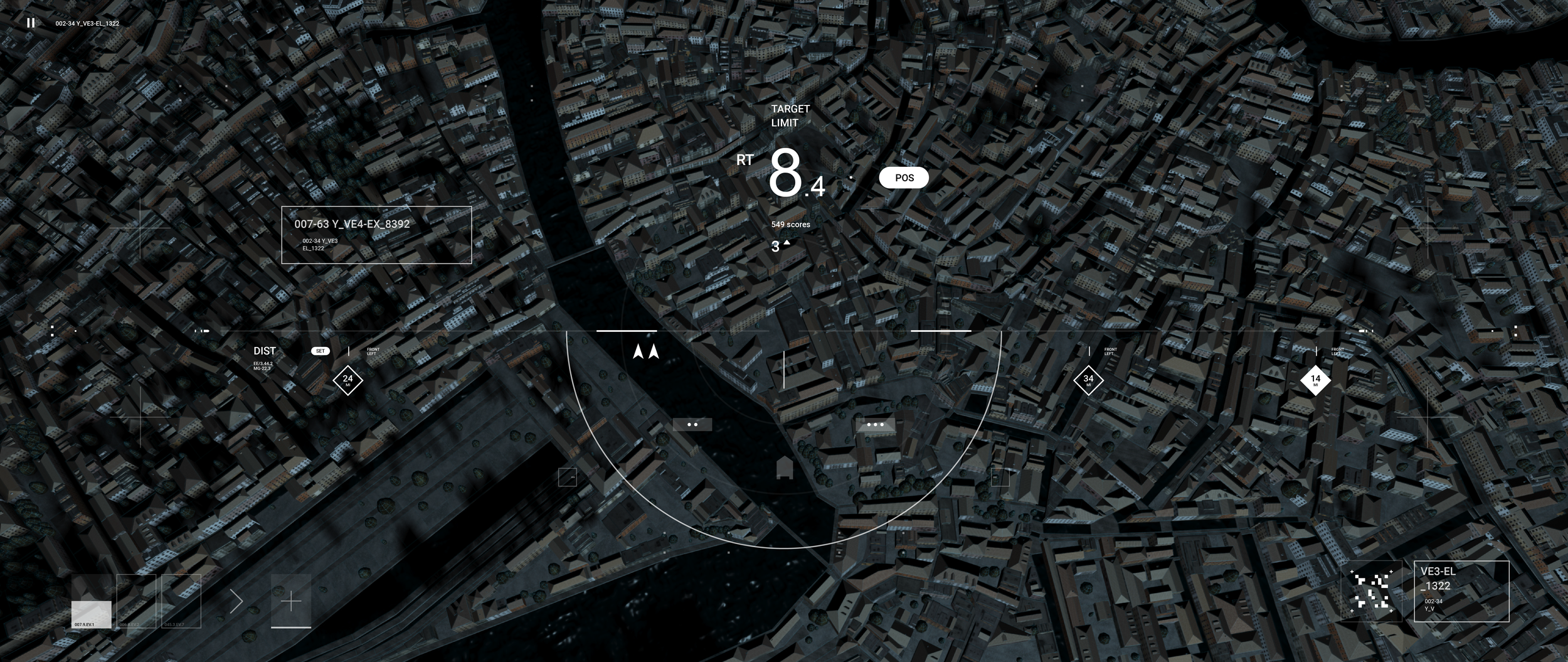

defense

Mission-fit assurance for ISR, autonomy, and C2.

Luminae gives decision-makers live, replayable evidence for every AI-assisted action.

enterprise

Audit-grade evidence for AI in finance, health, and critical infrastructure.

Every output leaves a proof you can show to risk, legal, and regulators.

ai health

AI failure rarely looks like a single bug. It’s drift: small shortcuts, hallucinations, and misaligned incentives that accumulate over time. Luminae treats AI health as a live signal, not a static benchmark… watching how behavior evolves in the wild.

-

Track how responses shift across days, weeks, and months. See when “good enough” answers start to slide into brittle or self-serving reasoning.

-

Identify recurring hallucinations, missing data patches, and other shortcuts long before they show up in headlines or incident reports.

-

Blend synthetic tests, red-teaming, and real-world traffic. Benchmarks tell you what a model did once; Luminae shows you what it’s doing now.

enforce

Live Decision Audit

Capture each model or agent decision at the moment it’s made — including inputs, outputs, reasoning signals, and key intermediate steps.

prove

Autonomy with Oversight

Keep humans on-the-loop instead of in the dark. Flag high-risk decisions, surface explanations, and give operators the context they need to trust or override.

control

Persistent Behavioral Memory

See how behavior changes over days, weeks, and months. Track drift, spot new failure modes early, and understand how real-world deployment is rewiring your AI.

platform

Luminae is an inference-time audit layer for AI systems. It sits alongside your models and agents, observes every decision as it happens, and generates cryptographically signed records that prove what occurred and why.

-

Luminae runs alongside your existing models and agents, not inside them. It observes requests and responses at the boundary, applies its checks, and emits proofs and verdicts without forcing you to retrain or rebuild.

-

Any model, any provider, any agent framework. Luminae doesn’t care whether your stack is OpenAI, Anthropic, local LLMs, or custom agents — it treats them all as decision streams to be audited and compared.

-

Every audited event is bound to a cryptographic signature. Inputs, outputs, context, and verdict are packaged as a tamper-evident record you can replay, verify, and hand to investigators, regulators, or partners.

Deployed in hours. Watching every decision in milliseconds. A single source of truth and control for AI behavior, before and after something goes wrong.

secure

Identity, isolation, and signed lineage by default.

The full packet is provided under NDA.

defense

-

Live runtime assurance for mission systems.

Monitors AI-assisted decisions in C2, ISR, and autonomy and flags high-risk behavior as it emerges, with proofs attached to every action.

-

Tactical oversight at the edge.

Keeps operators on-the-loop with real-time alerts, explanations, and replays for critical decisions made by forward-deployed models and agents.

-

Intelligence-grade analytics on AI behavior.

Correlates audited events across missions to reveal patterns, drift, and emerging threat signatures in how your AI is actually acting in the field.

-

Persistent watch on AI health.

Surfaces anomalous reasoning, hallucination clusters, and bias signatures over time so you can see when “temporary shortcuts” are becoming the system’s new normal.

-

Evidence factory for internal and external oversight.

Maps audited runtime behavior to your own policies and external standards, generating exportable proof packs from the same underlying decision records.

-

Executive view of AI risk and performance.

Shows how audited behavior tracks against your thresholds, SLAs, and mission objectives — and where trust is strong or eroding.

-

Forward-looking simulation and stress testing.

Replays and perturbs real histories so you can see how new models, policies, or autonomy levels would behave before they go live.